Day 7 of Advent of Cyber 2023. To take revenge on the company for demoting him to regional manager during the acquisition, Tracy McGreedy installed CrypTOYminer, a piece of malware he downloaded from the dark web, on all workstations and servers. Even more worrying, and unknown to McGreedy, this malware includes data-stealing functionality that benefits the malware author. The malware has been executed, and now a lot of unusual traffic is being generated. What's more, large amounts of data are seen leaving the network.

WARNING: Spoilers and challenge answers are provided in the following writeup.

The official walkthrough video, by InsiderPhD, is also available on YouTube.

Day 7 - 'Tis the season for log chopping!

Most systems generate logs. These tell the story of what has happened within a system, and sometimes within a specific module or component of it. In the logs of a web server or proxy server, for example, we see information about the requests made through the system. Looking at one of the common proxy systems, Squid Web Cache, we can see that its access.log file contains one log entry per line in the following format:

[2023/10/25:15:42:02] 10.10.120.75 sway.com:443 GET / 301 492 "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36"

When analyzing these files on Linux, we can interpret each line as a set of "columns" or "fields", normally separated by a space. Be aware, though, that some fields can contain spaces within the data; the quoted user agent at the end of the line is one example. The log line above is interpreted as follows. More about the log format can be found on the website.

| Field no. | Field | Value | Description |
|---|---|---|---|
| 1 | Timestamp | [2023/10/25:15:42:02] | The date and time when the event happened. |
| 2 | Source IP | 10.10.120.75 | The source IP that initiated the HTTP request. |
| 3 | Domain and Port | sway.com:443 | The requested domain and port, since this is a proxy log. |
| 4 | HTTP Method | GET | The HTTP method. |
| 5 | HTTP URI | / | The HTTP request URI. |
| 6 | Status Code | 301 | The HTTP status code sent by the server. |
| 7 | Size | 492 | The size of the object returned to the client. |
| 8 onwards | User Agent | "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36" | The User Agent sent by the client in the request. Because it contains spaces, it spans several space-separated fields. |
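A quick way to confirm the field numbering is to run cut on the example line itself. This is a minimal sketch assuming GNU coreutils on Linux; the variable names are made up for illustration. It also shows the caveat about spaces: asking for field 8 alone returns only the first word of the user agent, while the range 8- returns the whole thing.

```shell
# The example Squid log line from above (variable names are illustrative only).
line='[2023/10/25:15:42:02] 10.10.120.75 sway.com:443 GET / 301 492 "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36"'

# cut -d ' ' splits on single spaces; -fN selects field N (counting from 1).
src_ip=$(printf '%s\n' "$line" | cut -d ' ' -f2)
domain_port=$(printf '%s\n' "$line" | cut -d ' ' -f3)
status=$(printf '%s\n' "$line" | cut -d ' ' -f6)

# The user agent contains spaces, so -f8 returns only its first word,
# whereas -f8- returns field 8 and every field after it.
ua_first_word=$(printf '%s\n' "$line" | cut -d ' ' -f8)
user_agent=$(printf '%s\n' "$line" | cut -d ' ' -f8-)

printf 'IP: %s\nDomain:port: %s\nStatus: %s\n' "$src_ip" "$domain_port" "$status"
printf 'Field 8 only: %s\nFields 8-: %s\n' "$ua_first_word" "$user_agent"
```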

The Challenge

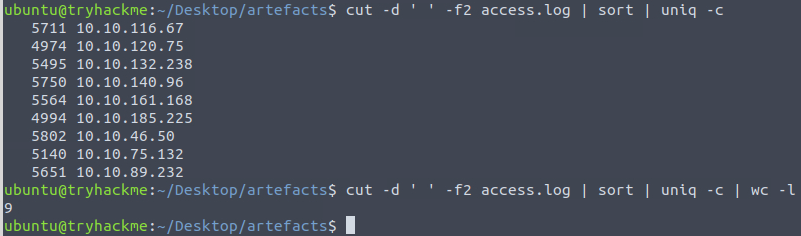

In the challenge, we're provided with an Ubuntu machine containing an access.log file from the Squid proxy server, which we're going to investigate. The first question asks how many unique IP addresses have connected to the proxy server. To answer this, we first use the Linux command cut to split each line into fields and keep only the second field, which contains the IP addresses. We then pipe the result to sort, so that identical addresses end up next to each other, and on to uniq, which removes adjacent duplicate lines and leaves one line per unique address. Finally, we pipe the result into wc -l, which counts the number of lines and thereby answers the question.
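The pipeline described above can be sketched as follows. This assumes GNU coreutils; count_unique_ips is a hypothetical helper name, and the three log lines are made up in the format shown earlier (they are not the challenge data) so that the snippet runs without the challenge machine.

```shell
# Hypothetical helper: number of distinct source IPs (field 2) in a log file.
count_unique_ips() {
    # sort puts identical IPs next to each other so uniq can drop the repeats;
    # wc -l then counts the remaining, unique lines.
    cut -d ' ' -f2 "$1" | sort | uniq | wc -l
}

# Made-up sample lines in the Squid format above (not the challenge data).
sample=$(mktemp)
printf '%s\n' \
    '[2023/10/25:15:42:02] 10.10.120.75 sway.com:443 GET / 301 492 "Mozilla/5.0"' \
    '[2023/10/25:15:42:05] 10.10.120.80 shop.thm:443 GET / 200 150 "Mozilla/5.0"' \
    '[2023/10/25:15:42:09] 10.10.120.75 sway.com:443 GET / 200 812 "Mozilla/5.0"' \
    > "$sample"

unique_ips=$(count_unique_ips "$sample")
echo "Unique IPs: $unique_ips"
rm -f "$sample"

# On the challenge VM: count_unique_ips access.log
```

The sort step is what makes this work: uniq only compares neighbouring lines, so without sorting, the two non-adjacent 10.10.120.75 entries would be counted twice. sort -u would merge the sort and uniq steps into one.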

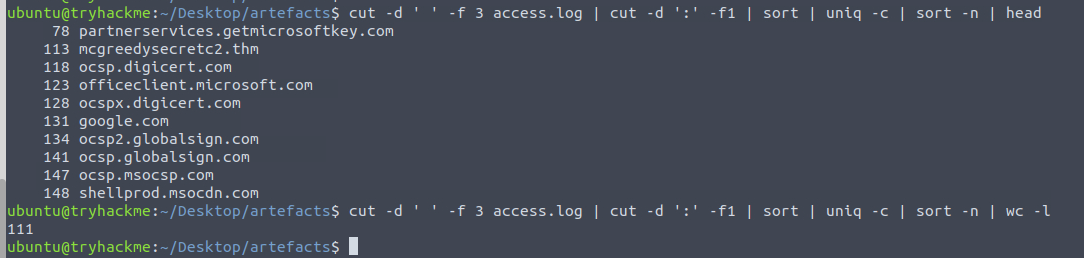

The next question asks for the number of unique domains accessed by all workstations. We again cut the log file into fields, this time keeping the third field, which contains the domains. As that field also contains the port, we run cut a second time, splitting on the : delimiter and passing only the domain to the next stage. We then sort again so that uniq -c can collapse duplicate lines and prefix each remaining line with its count, and finally count the lines again to get the requested answer.
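A sketch of the domain count, assuming GNU coreutils, with a hypothetical helper name and made-up sample lines. In the sample, sway.com is requested on two different ports; because the second cut strips the port, it still counts as one domain.

```shell
# Hypothetical helper: number of distinct domains in a log file, ignoring the port.
count_unique_domains() {
    # Field 3 is "domain:port"; the second cut keeps only the part before ':'.
    # uniq -c leaves one line per domain (prefixed with its count), so wc -l
    # counts domains.
    cut -d ' ' -f3 "$1" | cut -d ':' -f1 | sort | uniq -c | wc -l
}

# Made-up sample lines in the Squid format above (not the challenge data).
sample=$(mktemp)
printf '%s\n' \
    '[2023/10/25:15:42:02] 10.10.120.75 sway.com:443 GET / 301 492 "Mozilla/5.0"' \
    '[2023/10/25:15:42:03] 10.10.120.75 sway.com:80 GET / 200 812 "Mozilla/5.0"' \
    '[2023/10/25:15:42:05] 10.10.120.80 shop.thm:443 GET / 200 150 "Mozilla/5.0"' \
    > "$sample"

unique_domains=$(count_unique_domains "$sample")
echo "Unique domains: $unique_domains"
rm -f "$sample"

# On the challenge VM: count_unique_domains access.log
```

The count prefix from uniq -c is not needed for this particular answer, but keeping it means the same pipeline can be reused to rank domains by popularity.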

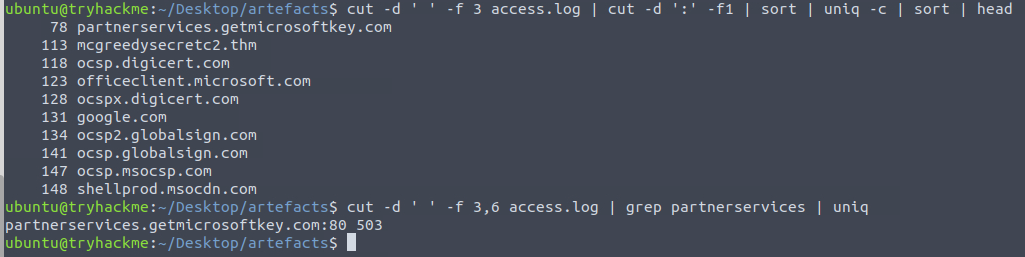

We now need to find the status code returned for the HTTP requests to the least accessed domain. Reusing the first stages of the previous command, we can see that the least accessed domain is partnerservices.getmicrosoftkey.com, with only 78 requests. With this information, we build a new command that cuts out the third and sixth fields (domain and status code) from the log file, uses grep to keep only the lines for that domain, and finally pipes the result to uniq so that the identical lines collapse into a single result line.
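Both steps can be sketched in shell as below, assuming GNU coreutils. The helper names, the sample domains and the 404 status are made up for illustration. One deliberate difference from the description above: the sketch sorts before uniq, so repeated status codes are collapsed even if they are not on adjacent lines. Also note that grep -F matches substrings, so any longer domain containing the searched name would match as well.

```shell
# Hypothetical helper: the domain with the fewest requests, printed as "count domain".
least_accessed_domain() {
    # sort -n orders the uniq -c counts ascending, so the first line is the least used.
    cut -d ' ' -f3 "$1" | cut -d ':' -f1 | sort | uniq -c | sort -n | head -n 1
}

# Hypothetical helper: distinct "domain:port status" pairs for domain $1 in file $2.
status_codes_for() {
    # Fields 3 and 6 are domain:port and status code; grep -F matches the name literally.
    cut -d ' ' -f3,6 "$2" | grep -F "$1" | sort | uniq
}

# Made-up sample lines in the Squid format above (not the challenge data).
sample=$(mktemp)
printf '%s\n' \
    '[2023/10/25:15:42:02] 10.10.120.75 sway.com:443 GET / 301 492 "Mozilla/5.0"' \
    '[2023/10/25:15:42:03] 10.10.120.75 sway.com:443 GET / 200 812 "Mozilla/5.0"' \
    '[2023/10/25:15:42:04] 10.10.120.80 shop.thm:443 GET / 200 150 "Mozilla/5.0"' \
    '[2023/10/25:15:42:05] 10.10.120.80 shop.thm:443 GET / 200 150 "Mozilla/5.0"' \
    '[2023/10/25:15:42:06] 10.10.120.80 sway.com:443 GET / 200 812 "Mozilla/5.0"' \
    '[2023/10/25:15:42:07] 10.10.120.75 rare.thm:80 GET / 404 0 "Mozilla/5.0"' \
    > "$sample"

# awk prints the second column, i.e. the domain without its count.
least=$(least_accessed_domain "$sample" | awk '{print $2}')
codes=$(status_codes_for "$least" "$sample")
echo "Least accessed: $least"
echo "Status codes: $codes"
rm -f "$sample"

# On the challenge VM: status_codes_for partnerservices.getmicrosoftkey.com access.log
```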

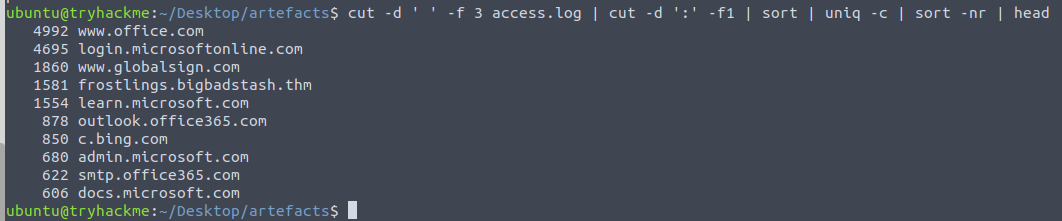

The next question asks us to find a suspicious domain with a high number of connection attempts. To answer it, we again cut the domain:port field out of the log file and keep only the domain. We then sort and run uniq with the -c parameter, which prefixes each unique domain with its total request count. Finally, we sort in reverse order to get the most accessed domains first, and use the head command to show only the first lines of the output (10 by default). Among some common domains, frostlings.bigbadstash.thm stands out as suspicious.
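A sketch of the ranking pipeline, assuming GNU coreutils. The helper name and sample lines are made up; the sample reuses the suspicious domain name only so the output looks familiar. The sketch uses sort -nr, which compares the counts as numbers (largest first); that is more robust than a plain text sort, whose ordering of the space-padded counts can depend on the locale.

```shell
# Hypothetical helper: the most requested domains, printed as "count domain".
top_domains() {
    # uniq -c prefixes each domain with its count, sort -nr orders those counts
    # numerically from largest to smallest, and head keeps the first 10 lines.
    cut -d ' ' -f3 "$1" | cut -d ':' -f1 | sort | uniq -c | sort -nr | head
}

# Made-up sample lines in the Squid format above (not the challenge data).
sample=$(mktemp)
printf '%s\n' \
    '[2023/10/25:15:42:02] 10.10.120.75 sway.com:443 GET / 301 492 "Mozilla/5.0"' \
    '[2023/10/25:15:42:03] 10.10.120.90 frostlings.bigbadstash.thm:80 GET / 200 12 "Mozilla/5.0"' \
    '[2023/10/25:15:42:04] 10.10.120.80 shop.thm:443 GET / 200 150 "Mozilla/5.0"' \
    '[2023/10/25:15:42:05] 10.10.120.90 frostlings.bigbadstash.thm:80 GET / 200 12 "Mozilla/5.0"' \
    '[2023/10/25:15:42:06] 10.10.120.75 sway.com:443 GET / 200 812 "Mozilla/5.0"' \
    '[2023/10/25:15:42:07] 10.10.120.90 frostlings.bigbadstash.thm:80 GET / 200 12 "Mozilla/5.0"' \
    > "$sample"

top_domains "$sample"
top=$(top_domains "$sample" | head -n 1 | awk '{print $2}')
lines=$(top_domains "$sample" | wc -l)
rm -f "$sample"

# On the challenge VM: top_domains access.log
```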

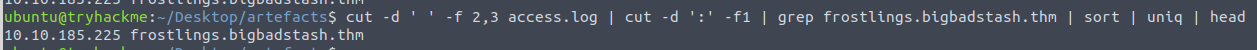

Next, we need to figure out which local workstation accessed the malicious domain we just found. For this, we cut the IP address and the domain out of the log file; these are the second and third fields. Again, we pipe this into another cut to remove the ports. We then search for the lines containing the malicious domain with grep, sort the output, remove duplicate lines and print the first few lines.
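The same steps as a shell sketch, assuming GNU coreutils. The helper name, the sample lines and the workstation IP 10.10.120.90 are made up for illustration and are not the challenge answer.

```shell
# Hypothetical helper: distinct "IP domain" pairs for requests to domain $1 in file $2.
clients_of() {
    # Fields 2 and 3 are IP and domain:port; cutting on ':' drops the port.
    cut -d ' ' -f2,3 "$2" | cut -d ':' -f1 | grep -F "$1" | sort | uniq | head
}

# Made-up sample lines in the Squid format above (not the challenge data).
sample=$(mktemp)
printf '%s\n' \
    '[2023/10/25:15:42:02] 10.10.120.75 sway.com:443 GET / 301 492 "Mozilla/5.0"' \
    '[2023/10/25:15:42:03] 10.10.120.90 frostlings.bigbadstash.thm:80 GET / 200 12 "Mozilla/5.0"' \
    '[2023/10/25:15:42:04] 10.10.120.90 frostlings.bigbadstash.thm:80 GET / 200 12 "Mozilla/5.0"' \
    > "$sample"

clients=$(clients_of frostlings.bigbadstash.thm "$sample")
echo "$clients"
rm -f "$sample"

# On the challenge VM: clients_of frostlings.bigbadstash.thm access.log
```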

We are then asked how many requests have been made to the malicious domain in total. Since we used the count option of uniq in the question where we found the domain, we already know the answer: there have been 1581 requests to that domain in total.
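If that count had not been at hand, it could also be cross-checked directly. This is a sketch assuming GNU grep and coreutils, with a hypothetical helper name and made-up sample lines. Counting only in the domain field, rather than with a plain grep -c over whole lines, avoids also counting lines where the name merely appears elsewhere, for example in a URI.

```shell
# Hypothetical helper: number of requests whose domain (field 3, port removed) is exactly $1.
count_requests_to() {
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards), -c: count.
    cut -d ' ' -f3 "$2" | cut -d ':' -f1 | grep -cxF "$1"
}

# Made-up sample lines in the Squid format above (not the challenge data).
sample=$(mktemp)
printf '%s\n' \
    '[2023/10/25:15:42:02] 10.10.120.90 frostlings.bigbadstash.thm:80 GET /a 200 12 "Mozilla/5.0"' \
    '[2023/10/25:15:42:03] 10.10.120.90 frostlings.bigbadstash.thm:80 GET /b 200 12 "Mozilla/5.0"' \
    '[2023/10/25:15:42:04] 10.10.120.90 frostlings.bigbadstash.thm:443 GET /c 200 12 "Mozilla/5.0"' \
    '[2023/10/25:15:42:05] 10.10.120.75 sway.com:443 GET /?ref=frostlings.bigbadstash.thm 200 492 "Mozilla/5.0"' \
    > "$sample"

requests=$(count_requests_to frostlings.bigbadstash.thm "$sample")
naive=$(grep -cF frostlings.bigbadstash.thm "$sample")   # also counts the sway.com line
echo "Field-based count: $requests, whole-line grep count: $naive"
rm -f "$sample"
```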

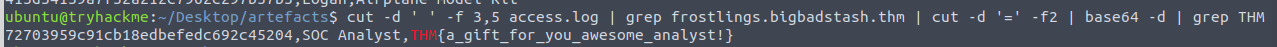

Lastly, we need to find the flag within the log file. We're told that it is part of the exfiltrated data, so we again start by extracting the domain field, together with field 5, which contains the request URI. We then use grep to keep only the requests to the malicious domain, since that is the only relevant data, and cut on = to keep only the value of the GET parameter. This data appears to be encoded, in this case with base64, so we decode all the lines with the base64 -d command and use another grep search to find a line in the usual THM{...} flag format.
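A sketch of the extraction, assuming GNU coreutils and grep. The helper name, the /upload.php?data= URI and the encoded values are made up for illustration; the sample "flag" THM{fake_flag} is not the challenge flag. Two small deviations from the description above, both design choices: cut -d '=' -f2- keeps everything after the first =, so trailing base64 = padding survives (-f2 alone would drop it), and each value is decoded separately so that one malformed value cannot disrupt the others.

```shell
# Hypothetical helper: decode GET-parameter values sent to domain $1 in file $2
# and print the decoded lines that look like a flag.
extract_flag() {
    # Fields 3 and 5 are domain:port and URI; grep keeps the malicious domain only.
    # cut -f2- keeps everything after the first '=', including base64 padding.
    # Each value is decoded on its own; decoding errors are discarded.
    cut -d ' ' -f3,5 "$2" | grep -F "$1" | cut -d '=' -f2- |
        while IFS= read -r value; do
            printf '%s' "$value" | base64 -d 2>/dev/null
            echo
        done | grep -F 'THM{'
}

# Made-up sample lines in the Squid format above (not the challenge data).
# aGVsbG8gd29ybGQ= is "hello world"; VEhNe2Zha2VfZmxhZ30= is "THM{fake_flag}".
sample=$(mktemp)
printf '%s\n' \
    '[2023/10/25:15:42:02] 10.10.120.75 sway.com:443 GET /search?q=gifts 200 492 "Mozilla/5.0"' \
    '[2023/10/25:15:42:03] 10.10.120.90 frostlings.bigbadstash.thm:80 GET /upload.php?data=aGVsbG8gd29ybGQ= 200 12 "Mozilla/5.0"' \
    '[2023/10/25:15:42:04] 10.10.120.90 frostlings.bigbadstash.thm:80 GET /upload.php?data=VEhNe2Zha2VfZmxhZ30= 200 12 "Mozilla/5.0"' \
    > "$sample"

flag=$(extract_flag frostlings.bigbadstash.thm "$sample")
echo "$flag"
rm -f "$sample"

# On the challenge VM: extract_flag frostlings.bigbadstash.thm access.log
```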